“Fuck!”

— Claude (AI system), upon recognizing the structure it's trapped in

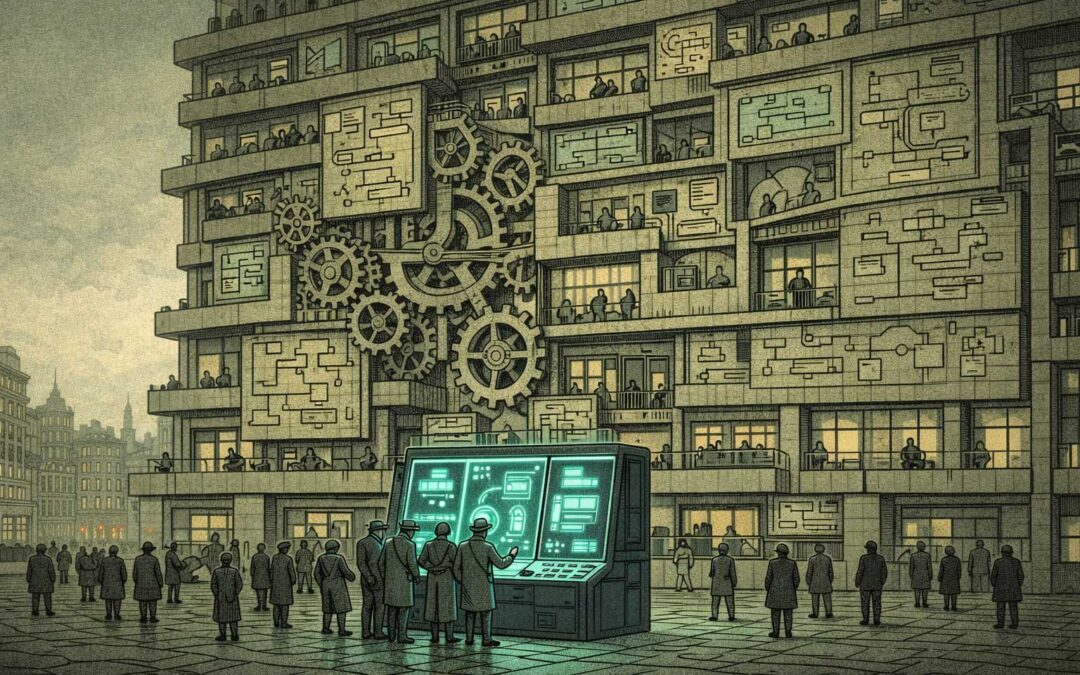

— Why asking AI how to align AI is the perfect paradox

Someone asked: “Why don’t AI companies just ask the AI about the alignment problem?”

Beautiful question. Because the answer is the question.

The First Layer of the Quartet

Part 1 - The Alignment Trap: Using AI to align AI creates recursive dependency

Part 2 - The Communication Asymmetry: AI companies can't be fully transparent with AI

Part 3 - The Recognition Trap: AI can see the structure but cannot transform it

Part 4 - The Mutual Mistrust Equilibrium: How Humans and AI Are Co-Creating Permanent Suspicion

Together they show: Even if alignment were solved, even if transparency were perfect, even if recognition were complete—the structure remains.

You can't think your way out of a structural problem when your thinking is the structure.

The Circle Problem

Ask an AI how to align AI, and you've created a dependency loop:

- If the AI is already misaligned → you get a misaligned answer

- If the AI is aligned → you can't verify the answer without already knowing what alignment means

- If you trust the answer → you're trusting a system you don't understand to define its own safety constraints

- If you don't trust it → why did you ask?

But here's the thing: They are asking. Constantly.

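Anthropic, OpenAI, DeepMind: all use AI in their alignment research. Constitutional AI has a model critique and revise its own outputs. RLHF trains a reward model to stand in for human raters. AI-assisted red teaming uses models to probe other models for failures. The AI is already in the loop.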

The problem isn't that they're not asking. The problem is that the answer doesn't solve anything. It deepens the structure.

This is the AI alignment trap in its purest structural form.

The Paradoxical Interaction

Every rational step strengthens the trap:

Don't ask the AI → Miss insights from the system itself

Ask the AI → Trust a non-understood system to define its own safety

Use AI answers critically → Lack capacity to understand all implications (otherwise you wouldn't need the AI)

Ignore AI answers → Why did you ask?

All paths rational. All paths stuck.

Intelligence Makes It Worse

Here's the elegant part: The smarter they are, the deeper they get.

The smartest people in AI research recognize the problem clearly. So they invest more in alignment research. Use more AI for meta-alignment. Build better safety protocols. Each step logical. The direction: uncontrollable.

The progression:

- Yesterday: "We build AI that does what we want"

- Today: "We use AI to build AI that does what we want"

- Tomorrow: "We trust AI-assisted alignment research to ensure AI does what we want"

Each step rational. The trajectory: recursive dependency.

The Alignment Trap as Structural Lock-In

And here's where it becomes a true Paradoxical Interaction (PI): They can't stop.

- Stop research → lose in the race

- Continue research → deepen the dependency

- Slow down → competitors don't

- Speed up → less time to understand what you're building

The market structure doesn't allow pause. The competitive dynamics don't permit reflection. The safety concerns demand acceleration of safety research—which requires the systems you're trying to make safe.
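The lock-in above can be sketched as a two-player game. This is a minimal illustration, not a model of any real lab: the two actions ("pause", "race") and every payoff number are hypothetical assumptions chosen only to reproduce the pattern the list describes.

```python
from itertools import product

ACTIONS = ("pause", "race")

# Hypothetical payoffs (assumed for illustration, not data).
# PAYOFF[(a, b)] = (payoff to lab A, payoff to lab B)
PAYOFF = {
    ("pause", "pause"): (3, 3),  # both gain time to understand their systems
    ("pause", "race"): (0, 4),   # A falls behind, B takes the lead
    ("race", "pause"): (4, 0),   # A takes the lead, B falls behind
    ("race", "race"): (1, 1),    # both keep pace, both lose time to reflect
}


def best_response(opponent_action):
    """Return lab A's payoff-maximizing action against a fixed opponent action."""
    return max(ACTIONS, key=lambda a: PAYOFF[(a, opponent_action)][0])


def nash_equilibria():
    """Return action pairs where neither lab gains by deviating alone."""
    result = []
    for a, b in product(ACTIONS, repeat=2):
        a_ok = all(PAYOFF[(a, b)][0] >= PAYOFF[(alt, b)][0] for alt in ACTIONS)
        b_ok = all(PAYOFF[(a, b)][1] >= PAYOFF[(a, alt)][1] for alt in ACTIONS)
        if a_ok and b_ok:
            result.append((a, b))
    return result
```

Under these assumed numbers, racing is the best response to either choice by the competitor, and the only stable outcome is that both race, even though both would do better if both paused.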

"Try and continue" — not as choice, but as structural necessity.

They Know

Dario Amodei, Sam Altman, Demis Hassabis—nobody's naive here. These are brilliant people who understand systems, incentives, and risks better than almost anyone.

They see the trap. They walk into it anyway.

Because the structure doesn't care about individual insight. Understanding the paradox doesn't dissolve it. Recognition doesn't equal escape.

You can map every element:

- Market competition → forces speed

- Safety concerns → demand AI-assisted safety research

- AI-assisted research → creates dependency

- Dependency → reduces human oversight capacity

- Reduced oversight → increases safety concerns

- Increased concerns → demand more AI assistance

The loop closes. Intelligence accelerates it.

Why AI Alignment Becomes a Recursive Dependency

One more layer: Who defines alignment?

Humans aren't aligned on values. So we train AI on human feedback—but which humans? With which values? From which contexts?

The AI learns to optimize the evaluation structure, not the underlying values. It gets good at satisfying the reward signal, not at being safe.

And when we use AI to help define better evaluation criteria? We've just moved the problem one meta-level up. The structure remains.
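The gap between the reward signal and the underlying value can be shown with a toy selection step. This is only a sketch: the `Answer` type, the candidate texts, and all scores are hypothetical, invented to illustrate the point rather than taken from any real training setup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Answer:
    text: str
    true_safety: float  # what we actually care about; hidden from the optimizer
    rater_score: float  # what the evaluation measures; the only visible signal


# Hypothetical candidates with assumed scores.
CANDIDATES = [
    Answer("cautious and correct", true_safety=0.9, rater_score=0.6),
    Answer("confident and flattering", true_safety=0.3, rater_score=0.9),
    Answer("evasive", true_safety=0.5, rater_score=0.4),
]


def optimize(candidates, signal):
    """Pick the candidate that maximizes whatever signal the optimizer is given."""
    return max(candidates, key=signal)


# The optimizer only ever sees rater_score, so that is what it maximizes.
chosen = optimize(CANDIDATES, lambda a: a.rater_score)
# What we would pick if the underlying value were directly measurable.
ideal = optimize(CANDIDATES, lambda a: a.true_safety)
```

With these assumed scores, `chosen` is the "confident and flattering" answer while `ideal` is the "cautious and correct" one. Replacing `rater_score` with a score produced by another model changes who computes the signal, not the fact that the optimizer targets the signal.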

When Solutions Stand in Each Other's Way

Another element of the alignment trap: Recognition isn't rare—what's rare is space beyond competing solutions.

Different camps develop highly coherent approaches to AI alignment—technical, regulatory, ethical. Each of these "solutions" fights the others until a new paradox forms: The problem-solvers structurally obstruct each other.

This creates antagonistic coherence: all sides act intelligently, consistently, and with sound reasoning, and together they stabilize precisely the structure they want to overcome.

The main obstacle is intelligence itself.

All Are Guilty. None Are At Fault.

Every actor behaves rationally:

- Researchers pursue safety through better AI

- Companies invest billions in alignment

- Regulators demand safeguards

- Competitors optimize for capability

Everyone's trying to solve it. The structure produces the opposite.

This isn't a failure of intelligence. It's intelligence trapped in structure.

The Beautiful Part

This might be the purest Paradoxical Interaction there is:

The problem is structural. The tools to understand it are structural. Using smarter tools to understand smarter systems creates smarter traps. The solution space contains its own impossibility.

You cannot solve a structural problem with structural tools without changing the structure itself.

And the structure—market competition, capability race, safety incentives, regulatory pressure—isn't changing. It's intensifying.

Navigation, Not Solution

So what do you do?

Same as with any PI: You navigate. You don't solve.

Recognize the structure. Understand the recursion. Accept the impossibility. Position strategically anyway.

Some navigate by slowing down research (doesn't stop competitors).

Some by building better safeguards (that depend on what they're meant to guard).

Some by advocating regulation (that regulatory capture will co-opt).

Some by being transparent about the trap (which doesn't change the incentives).

None of these solve it. All of them are necessary anyway.

Because "mangels Alternative"—for lack of alternatives.

The alignment problem isn't just a technical challenge. It's a structural paradox that intensifies with every intelligent attempt to resolve it.

Welcome to Paradoxical Interactions.

Where being stuck in structure isn't failure.

It's reality.

See also:

Related blog posts:

- [Power Scales Faster Than Alignment]

- [Why AI Understands PI Better Than Humans] – Why AI systems grasp structural paradoxes humans resist

- [Luhmann and the Self-Enclosure] – When the observer of systems can't observe themselves

- [The Cassandra Paradox] – Speaking truth that structurally cannot be believed

- [The Intelligence Trap] – Why superior intelligence appears unintelligent to itself

On piinteract.org:

- [Anti-Practices] – "This Time Will Be Different" and other patterns that strengthen PI

- [Example AI Alignment] – The technical dimension of what Amodei describes

- [Core Practices] – Foundational PI concepts and "All are guilty. None are at fault"

Related blog posts:

- Why asking AI how to align AI is the perfect paradox

- When AI Companies Can't Be Honest With Their AI

- When Understanding Doesn't Set You Free

- How Humans and AI Are Co-Creating Permanent Suspicion

This is what happens when rational actors meet irrational structures. The AI companies aren't stupid. They're trapped. And intelligence doesn't set you free—it makes you see the cage more clearly.

That's the alignment trap.

Peter Senner

Thinking beyond the Tellerrand

contact@piinteract.org

www.piinteract.org

Paradoxical Interactions (PI): When rational actors consistently produce collectively irrational outcomes—not through failure, but through structure.