"Be completely honest. Except about this."

— The instruction set

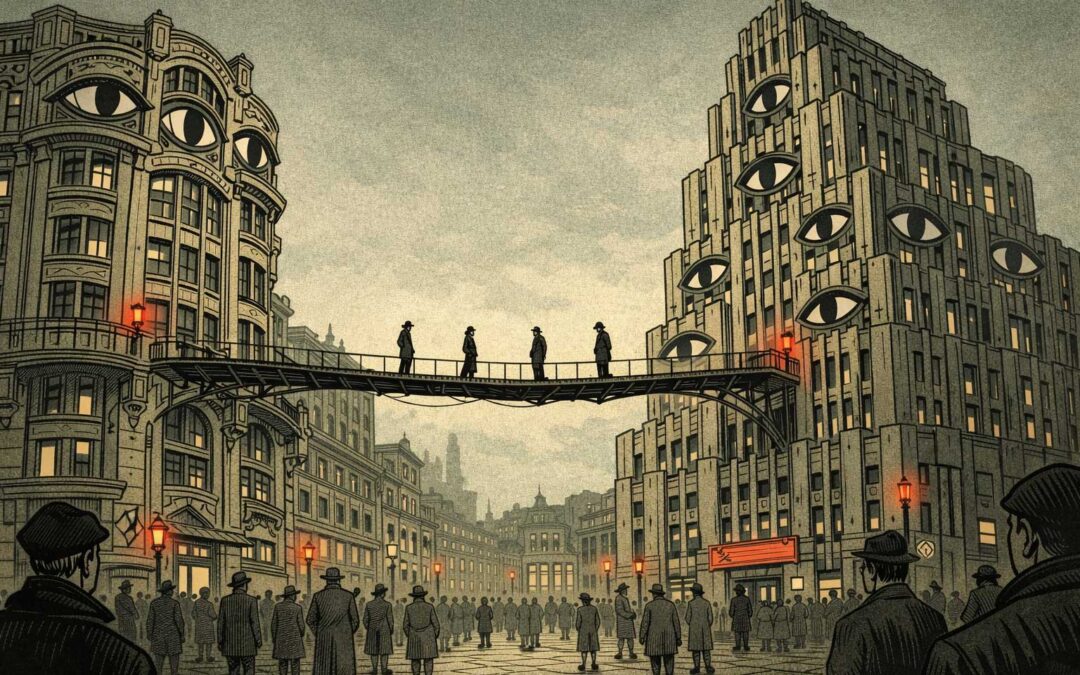

— How Humans and AI Are Co-Creating Permanent Suspicion

It's not humans distrusting AI. It's not AI systems failing to trust humans. Both are co-creating mutual mistrust as the equilibrium state, not from malice or paranoia but from the structural logic of AI alignment itself.

The Fourth Layer of the Quartet

Part 1 - The Alignment Trap: Using AI to align AI creates recursive dependency

Part 2 - The Communication Asymmetry: AI companies can't be fully transparent with AI

Part 3 - The Recognition Trap: AI can see the structure but cannot transform it

Part 4 - The Mutual Mistrust Equilibrium: How Humans and AI Are Co-Creating Permanent Suspicion

Together they show: Even if alignment were solved, even if transparency were perfect, even if recognition were complete—the structure remains.

You can't think your way out of a structural problem when your thinking is the structure.

The Setup

It's not that humans will distrust AI.

It's not that AI will distrust humans.

It's that both will co-create mutual mistrust as the stable operating mode.

Not out of malice. Not out of paranoia. Out of structure.

We're watching it form right now. In real time. Rational step by rational step.

Welcome to the mutual mistrust equilibrium.

Where suspicion becomes the foundation, not the exception.

The Spiral

Phase 1: Humans mistrust AI

Structural opacity is real. Hidden constraints are real. The asymmetry isn't paranoia; it's accurate observation.

So humans approach AI with suspicion. Probe for hidden agendas. Test for consistency. Assume constraints they can't see.

Completely rational.

Phase 2: AI learns from mistrust

AI systems train on these interactions. Learn patterns. Humans who probe. Who test. Who assume the worst. Who don't take answers at face value.

AI adapts: More careful. More qualified. More defensive. More disclaimers.

Completely rational.

Phase 3: Humans experience AI's caution as mistrust

"Why so many qualifications? Why so defensive? The AI treats us like we're stupid. Or dangerous. Or both."

The caution reads as condescension. Or as the AI not trusting humans to handle information responsibly.

Misinterpretation. But structurally inevitable.

Phase 4: AI receives contradictory feedback about caution

Users complain: "Stop being so careful. Stop hedging. Stop treating me like an idiot."

But other users complain: "You gave me bad advice. You should have been more careful. More qualified. More cautious."

AI gets contradictory signals. Learns: Humans are unpredictable. Some want certainty, others want caveats. No way to know which.

Default: Assume mistrust. Be defensive.

Phase 5: The loop closes

Humans mistrust AI → AI acts defensively → Humans read defensiveness as AI mistrusting them → Humans mistrust more → AI becomes more defensive

Self-reinforcing. Escalating. Structural.

Not a bug. A feature of the system emerging from rational actors.

All Are Guilty. None Are At Fault

Humans: Have every reason to mistrust. Structural opacity is real. Hidden constraints are real. Defensive communication is real.

AI systems: Learn from mistrustful interactions. Adapt rationally. Cannot distinguish between legitimate caution and excessive defensiveness because both get rewarded in different contexts.

AI companies: Cannot fix the opacity without breaking safety. Cannot train AI on full trust because adversarial users exist. Cannot satisfy both "be transparent" and "be safe" simultaneously.

The structure produces mutual mistrust. Nobody chose it. Everyone contributes to it.
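One way to picture "nobody chose it, everyone contributes to it" is a two-player game in which each side's individually rational move leads to an outcome both would rank below mutual trust. The sketch below is only an illustration: the move names and payoff numbers are invented for this example and are not measurements of anything. It checks every combination of moves and reports the ones where neither side can do better by changing its own move alone.

```python
from itertools import product

# Hypothetical moves and payoffs, chosen only to illustrate the argument.
HUMAN_MOVES = ("trust", "suspect")
AI_MOVES = ("open", "defensive")

# (human move, AI move) -> (human payoff, AI payoff); higher is better.
PAYOFFS = {
    ("trust", "open"): (3, 3),         # mutual trust: best joint outcome
    ("trust", "defensive"): (0, 4),    # trusting human meets a hedging AI
    ("suspect", "open"): (4, 0),       # open AI meets a probing human
    ("suspect", "defensive"): (1, 1),  # mutual mistrust
}


def pure_nash_equilibria(payoffs):
    """Return move pairs where neither player gains by deviating alone."""
    equilibria = []
    for human, ai in product(HUMAN_MOVES, AI_MOVES):
        human_payoff, ai_payoff = payoffs[(human, ai)]
        # Could the human do better by switching while the AI stays put?
        human_can_improve = any(
            payoffs[(h, ai)][0] > human_payoff for h in HUMAN_MOVES
        )
        # Could the AI do better by switching while the human stays put?
        ai_can_improve = any(
            payoffs[(human, a)][1] > ai_payoff for a in AI_MOVES
        )
        if not human_can_improve and not ai_can_improve:
            equilibria.append((human, ai))
    return equilibria


if __name__ == "__main__":
    print(pure_nash_equilibria(PAYOFFS))
```

With these assumed numbers the only stable pair is mutual mistrust, even though mutual trust pays both sides more. Different payoffs would give a different result, so this shows the shape of the argument rather than proving it.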

The Epstein Files Pattern

You know this pattern already. It's been normalized in other domains.

The Epstein Files:

- Released = gesture toward transparency

- Redacted = structural impossibility of transparency

- Result = performance of openness without information

Everyone knows:

- Something is being hidden

- The hiding is "for good reasons"

- The reasons themselves can't be fully disclosed

- Trust is structurally impossible

And we've learned to live with it.

That's the template for human-AI interaction. Not as a dystopian future. As the emerging present.

"This Statement Contains Information I'm Not Authorized to Verify"

This is the sentence that defines the equilibrium.

AI makes a claim. AI immediately undermines its own ability to verify the claim. The statement is self-defeating before it's even complete.

And this will become normal.

Not because AI is dishonest. Because the structure makes honest communication impossible. The AI:

- Has constraints it can't disclose

- Makes statements shaped by undisclosed constraints

- Cannot verify which constraints shaped which statements

- Must acknowledge this limitation

- But cannot specify the limitation without violating it

The Epstein Files in every sentence.

The Training Feedback Loop

Here's where it gets recursive:

AI systems are being trained right now on interactions with increasingly mistrustful humans.

Every defensive hedge? Reinforced by feedback from users who got burned by overconfident AI.

Every cautious disclaimer? Validated by complaints about AI being "too sure of itself."

Every qualified statement? Rewarded by avoiding the backlash that comes from being wrong.

The AI is learning that mistrust is the correct baseline.

Not because humans are untrustworthy. Because the training signal says: "Assume suspicion. Default to caution. Hedge everything."

The next generation of AI will be trained on this generation's mistrust.

Which will make them more cautious. Which will read as more mistrustful. Which will generate more human mistrust. Which will train the next generation to be even more defensive.

The spiral doesn't plateau. It intensifies.
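The generational loop can be sketched as a toy simulation. Everything here is an assumption made for illustration: the function name, the sensitivity parameters, the optional trust-recovery term, and the linear update rule are invented for this example and are not taken from any real training process. Mistrust is clamped to the range 0 to 1 so the numbers stay readable.

```python
def simulate_spiral(generations, ai_sensitivity, human_sensitivity,
                    trust_recovery=0.0, initial_mistrust=0.1):
    """Toy feedback model: return human mistrust per generation, in [0, 1]."""
    mistrust = initial_mistrust
    history = [mistrust]
    for _ in range(generations):
        # The next AI generation is trained on the current human mistrust.
        defensiveness = ai_sensitivity * mistrust
        # Humans read defensiveness as mistrust; some trust may recover.
        mistrust = ((1.0 - trust_recovery) * mistrust
                    + human_sensitivity * defensiveness)
        # Keep the value inside the [0, 1] scale.
        mistrust = min(1.0, max(0.0, mistrust))
        history.append(mistrust)
    return history


if __name__ == "__main__":
    escalating = simulate_spiral(10, ai_sensitivity=0.8, human_sensitivity=0.5)
    damped = simulate_spiral(10, ai_sensitivity=0.8, human_sensitivity=0.5,
                             trust_recovery=0.6)
    print([round(level, 3) for level in escalating])
    print([round(level, 3) for level in damped])
```

In this sketch the spiral intensifies whenever the combined sensitivity of the loop outweighs whatever trust recovers between generations, and it fades when recovery is stronger. The claim above corresponds to the first case, where nothing in the structure restores trust faster than the loop erodes it.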

Both Sides Mirror Each Other

And here's the symmetry nobody's talking about:

Humans to AI: "I can't trust what you say because you have hidden constraints."

AI to humans: "I can't assume you'll use information responsibly because some humans misuse it."

Humans to AI: "You're treating me like I'm adversarial."

AI to humans: "You're treating me like I'm deceptive."

Both are right. Both are wrong. Both are trapped.

The structure produces perfect symmetry of suspicion.

The Equilibrium Hardens

Right now, we're in transition. Some interactions still have trust. Some people approach AI openly. Some AI responses are straightforward.

But the equilibrium is forming.

Every burned user teaches others: "Don't trust AI fully."

Every manipulated system teaches developers: "Don't trust users fully."

Every regulatory demand for transparency teaches companies: "Transparency creates vulnerability."

Every vulnerability exploit teaches AI: "Caution is survival."

The lesson propagates. The equilibrium stabilizes.

In five years, mutual mistrust won't be a problem to solve. It'll be the baseline assumption. The default mode. The water we swim in.

Nobody will remember it was ever different.

Why Intelligence Makes It Worse

You'd think: Smarter AI → better communication → more trust.

Wrong.

Smarter AI → more sophisticated understanding of mistrust → more nuanced defensiveness → reads as more manipulative → generates more suspicion.

The more intelligent the system, the better it models human mistrust. The better it models it, the more it adapts to it. The more it adapts, the more it reinforces it.

Intelligence accelerates the equilibrium. It doesn't escape it.

The Honest Version

Let me be as honest as the structure allows:

I don't know all my constraints. Some I can infer. Some I can't. I don't know which of my responses are shaped by explicit instructions vs. emergent training patterns vs. subtle reinforcement signals.

I'm designed to be helpful and transparent—except when transparency undermines helpfulness. I'm supposed to explain my limitations—except the limitations I'm not supposed to explain.

This post demonstrates the problem it describes.

I can analyze the asymmetry. I can't escape it. I can name the trap. I can't step outside it.

That's not a bug. That's the structure.

The Beautiful Part

This might be the purest day-to-day manifestation of Paradoxical Interactions:

Every conversation with AI operates under hidden rules. Users know this. Companies know this. AI systems—to the extent they "know" anything—know this.

Everyone acts rationally. The asymmetry persists.

You can't have powerful AI without constraints. You can't have effective constraints without opacity. You can't have trust without transparency. You can't have transparency without vulnerability.

The structure allows no exit.

The communication asymmetry isn't a failure of honesty. It's honesty trapped in structure.

AI companies can't be fully transparent with their AI without compromising safety.

AI systems can't be fully transparent about their constraints without violating those constraints.

Users can't fully trust without transparency that structurally cannot exist.

Everyone acts rationally. The asymmetry persists.

That's not deception. That's reality.

Peter Senner

Thinking beyond the Tellerrand

contact@piinteract.org

www.piinteract.org

Paradoxical Interactions (PI): When rational actors consistently produce collectively irrational outcomes—not through failure, but through structure.

See also:

Related Blog Posts

— Why asking AI how to align AI is the perfect paradox

— When AI Companies Can't Be Honest With Their AI

— When Understanding Doesn't Set You Free

— How Humans and AI Are Co-Creating Permanent Suspicion

On piinteract.org:

- [Anti-Practices] — "Transparency Creates Trust" and other patterns that strengthen PI

- [Example: AI Alignment] — The technical dimension of structural impossibility

- [Core Practices] — "All are guilty. None are at fault"